ChatGPT is trained on a large set of data but what if you want it to answer questions about your own data, for example building a chatbot that answers questions about your knowledge base? The answer is embeddings and in this blog post I explain the underlying mechanisms and how to implement them. The code can be found here

Large Language Models (LLM) are trained on vast amounts of data but what if you have questions about data that is not in the training dataset? The answers are not usable in most cases or even worse, sometimes the model even “hallucinates” answers. The simple solution is to provide the model with the full context relevant to your question. This works if you have only small amounts of data, but you can’t just include a whole database with every query.

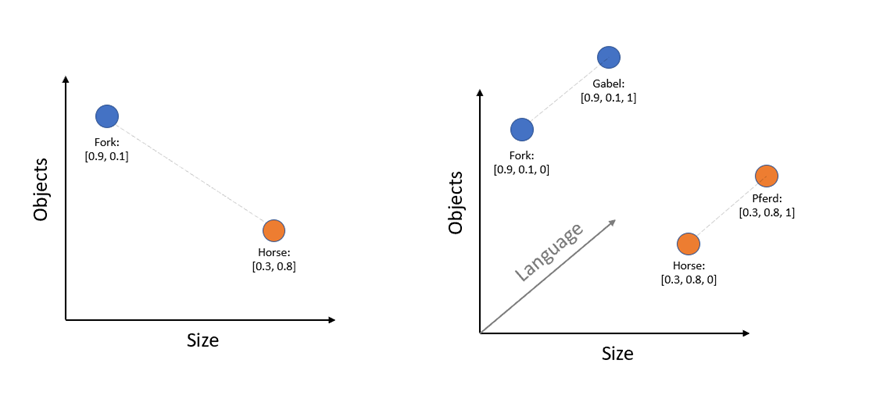

So what are embeddings? Embeddings are a way of representing a word (or parts of a word, a so-called “Token”) in multidimensional space. The resulting vector is how a large language model “sees” and groups together words. As a (very) simplified example, let’s look at the word “Horse” in a two-dimensional space with the Dimensions “Objects” and “Size”, you would find this word embedded somewhere along the X and Y axis: If we now add the word “Fork”, we expect it to be far away from the word horse in both dimensions. Adding an additional dimension for Language and putting in the German translations of these words “Pferd” and “Gabel”, we expect them to be very close in the dimensions “Objects” and “Size” but further away in the Dimension “Language”.

Again, this is a very simplified example with randomly chosen words, dimensions and values. In reality, there are much more dimensions that are much less understandable to us. But the same concept applies: the distance between two points can be used to infer similarities between words and therefore give a measure of “relevance” when comparing in a certain context.

Okay now why is this useful to answer questions about my own data? When working with the OpenAI API you can include only a limited number of words in a query. This means that you can’t just put all your data in the question. This is called the “Token Limit”. You have to make sure to only include the most relevant information to answer the question. Thus, resulting the following workflow:

- Split your data into smaller chunks.

- Get the embeddings of these text-chunks.

- Store the embeddings together with the corresponding text.

- Define your question and get the embeddings of your question.

- Compare the embeddings of your question with the embeddings stored in step 3.

- Retrieve the most relevant text-chunks in the context of your question based on distance.

- Combine the question and the relevant text chunks in one query.

- Send the full query to ChatGPT to answer your question.

Example

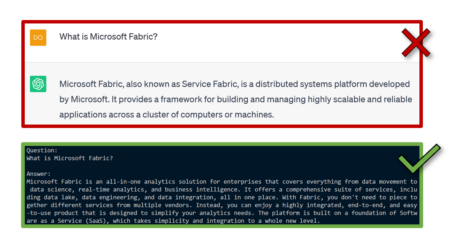

Microsoft just unveiled Microsoft Fabric. As it was just recently announced, ChatGPT doesn’t have knowledge about this product yet, because it was not in the training data set of the model:

This is not the answer we are looking for (But it might hint at some ambiguity regarding product names at Microsoft).

Implementing the previously mentioned workflow with the new Microsoft Fabric documentation provided by Microsoft and asking ChatGPT again via an API call yields these results:

Question: What is Microsoft Fabric?

Answer: Microsoft Fabric is an all-in-one analytics solution for enterprises that covers everything from data movement to data science, real-time analytics, and business intelligence. It offers a comprehensive suite of services, including data lake, data engineering, and data integration, all in one place. With Fabric, you don’t need to piece together different services from multiple vendors. Instead, you can enjoy a highly integrated, end-to-end, and easy-to-use product that is designed to simplify your analytics needs. The platform is built on a foundation of Software as a Service (SaaS), which takes simplicity and integration to a whole new level.

Question: What are the components of Microsoft Fabric?

Answer: Microsoft Fabric is a unified platform that offers a comprehensive suite of services, including data lake, data engineering, and data integration, all in one place. The platform is built on a foundation of Software as a Service (SaaS), which takes simplicity and integration to a whole new level. Microsoft Fabric brings together new and existing components from Power BI, Azure Synapse, and Azure Data Explorer into a single integrated environment. These components are then presented in various customized user experiences. The components of Microsoft Fabric include Data Engineering, Data Factory, Data Science, Data Warehouse, Real-Time Analytics, and Power BI. Additionally, Microsoft Fabric includes OneLake, which is a unified location to store all organizational data where the experiences operate.

Question: Tell me about the OneLake

Answer: OneLake is a unified storage system for all developers that provides a single SaaS experience and a tenant-wide store for data that serves both professional and citizen developers. It eliminates the need for users to understand any infrastructure concepts such as resource groups, RBAC (Role-Based Access Control), Azure Resource Manager, redundancy, or regions. OneLake is built on top of ADLS (Azure Data Lake Storage) Gen2 and is hierarchical in nature to simplify management across an organization. It provides a single-pane-of-glass file-system namespace that spans across users, regions, and even clouds. OneLake is built into the Microsoft Fabric service and is the foundation on which all the Fabric services are built.

Conclusion

Embeddings are a great way to allow the latest large language models to work with your own data. With the newer models like GPT-4, which have a much larger token limit, the possibilities of having a tailored and useful assistant are definitely becoming reality. Of course, the provided example is only a demo case. For a fully-fledged solution there is more to do. A few points to improve:

- Implementing a vector database to ensure performant vector search and comparison across large amounts of data and embeddings.

- Finetuning of the system string, chunk size and token budget management to enable more precise answers.

- Preprocessing like summarization or text extraction to ensure the most relevant content when comparing the embeddings.

- Chaining multiple tasks in multiple queries. For example, a dedicated summarization step in a separate query and only using the summarized text to further decrease the tokens used in a single question.

Want to try it out yourself? Create a free OpenAI trial here and check out the code used for this blog

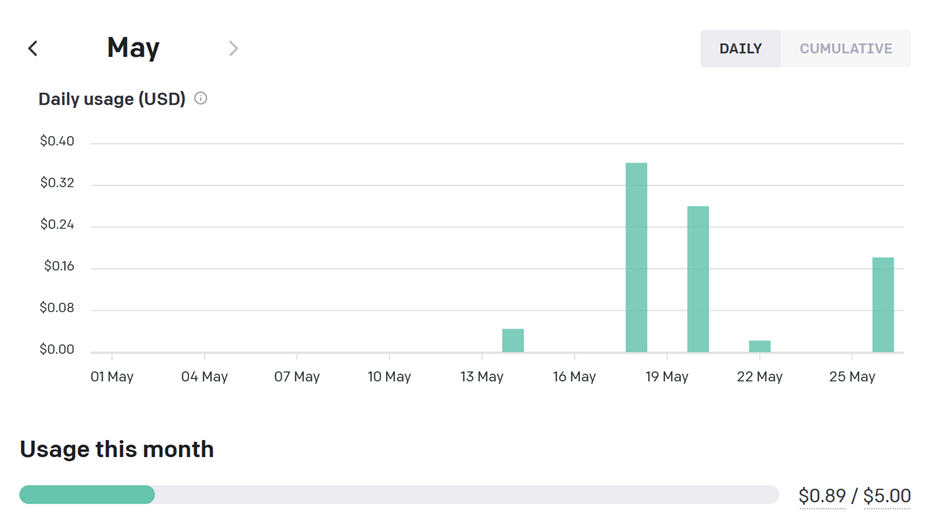

If you are wondering about the cost, here is an overview of the past few days working with embeddings.