In a recent project, I tried to copy data from a SharePoint site to an Azure Blob Storage using Azure Data Factory (Copy files from SharePoint to Blob Storage using Azure Data Factory – Syntera). The goal was to enable employees to upload their expense receipts to their SharePoint site using OneDrive’s built-in scan function. Using Azure Data Factory these receipts should then get copied into an Azure Blob Storage for further processing, utilizing different services of the Azure cloud. From a structural perspective, each employee needs his/her own folder and only the corresponding person has permissions to upload files into the folder. Translated into technical terms the implications are: I have to loop over all subfolders (each of them corresponding to an employee) and then loop through each file inside the subfolders. Seems like a pretty simple task, since nested loops are a quite common feature in ETL tools right? … right? Unfortunately, the answer is no, Azure Data Factory does not allow nested loops. The workaround is to execute another pipeline from within the ForEach loop whereby the executed childpipeline is then allowed to hold another loop. In the following step-by-step guide, I extended my previous solution, enabling it to copy multiple files from multiple folders.

The Solution

Let’s first have a look at the final pipeline in Azure Data Factory and break it down into individual tasks to solve:

Prerequisite:

- Register SharePoint (SPO) application in Azure Active Directory (AAD).

- Grant SPO site permission to a registered application in AAD.

- Provision ResourceGroup, Azure Data Factory (ADF) and Azure Data Lake Storage (ADLS) in your Azure Subscription.

All these steps are explained in detail in the previous blog: Copy files from SharePoint to Blob Storage using Azure Data Factory – Syntera

Inside of ADF main pipeline:

- WebActivity “GetBearerToken”: Get an access token from SPO via API call.

- WebActivity “GetSPOMainFolderMetadata”: Get SPO mainfolder metadata including a list of all subfolders using the SPO access token via API call.

- Execute Pipeline inside ForEach loop: Iterate through subfolders in list of subfolders and for each execute the child pipeline

Inside of ADF child pipeline:

- WebActivity “GetBearerToken”: Get an access token from SPO via API call.

- WebActivity “GetSPOSubFolderMetadata”: Get SPO subfolder metadata including a list of all files in the SPO target folder using the SPO access token via API call.

- CopyActivity “Copy data from SPO to ADLS” inside ForEach loop: Iterate through files in list of files and copy each file to ADLS.

In my previous blog, I covered all the steps to loop through subfolders and copy all files in them to a Blob Storage. There is one small adjustment due to executing the pipeline from within another pipeline. This change will be addressed in this blog. If you are interested in a step-by-step guide of that part, please refer to my previous blog.

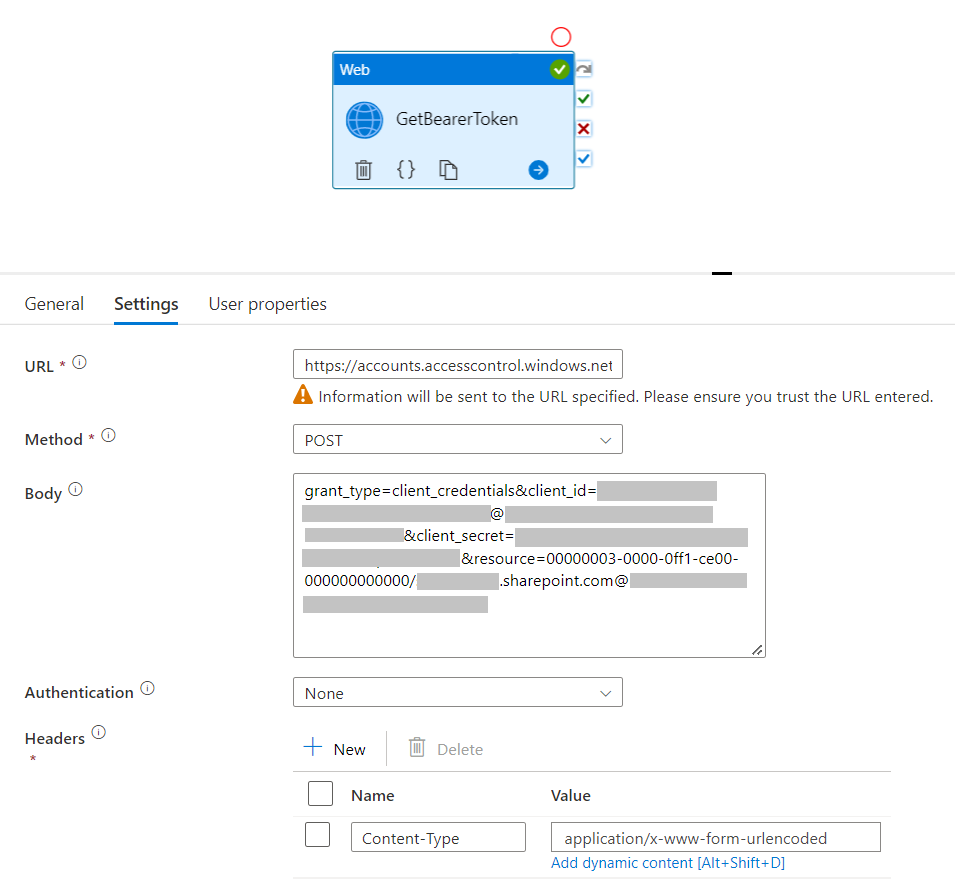

1. Create Pipeline in ADF and get access token from SPO

- Open ADF Studio

- In Azure portal under Navigate select Resource groups and click on the resource group you created

- Inside your resource group, you should see the ADF and ADLS resources we created previously

- After selecting your ADF resource under Getting started you should see Open Azure Data Factory Studio, which will open a new tab

- Create a new pipeline

- Under Author right-click Pipelines and select New pipeline

- Create a Web activity

- Under General select Web and drag it to your pipeline

- Under General choose a suitable name (in my case: GetBearerToken)

- In Settings enter the following information:

- URL:

https://accounts.accesscontrol.windows.net/[Tenant-ID]/tokens/OAuth/2- replace

[Tenant-ID]with the Directory (tenant) ID from your registered app

- replace

- Method: POST

- Authentication: None

- Headers:

- Name: Content-Type

- Value:

application/x-www-form-urlencoded

- Body:

grant_type=client_credentials&client_id=[Client-ID]@[Tenant-ID]&client_secret=[Client-Secret]&resource=00000003-0000-0ff1-ce00-000000000000/[Tenant-Name].sharepoint.com@[Tenant-ID]- replace

[Tenant-ID]with the Directory (tenant) ID from your registered app - replace

[Client-ID]with the Application (client) ID from your registered app - replace

[Client-Secret]with the value of the generated secret from your registered app - replace

[Tenant-Name]with the SPO domain name (in my case: syntera)

- replace

- URL:

- Select Debug to check if everything is set up correctly (check the output of the activity to see the access token)

- On General check the box for Secure output to ensure that your access tokens don’t get logged in your pipeline runs

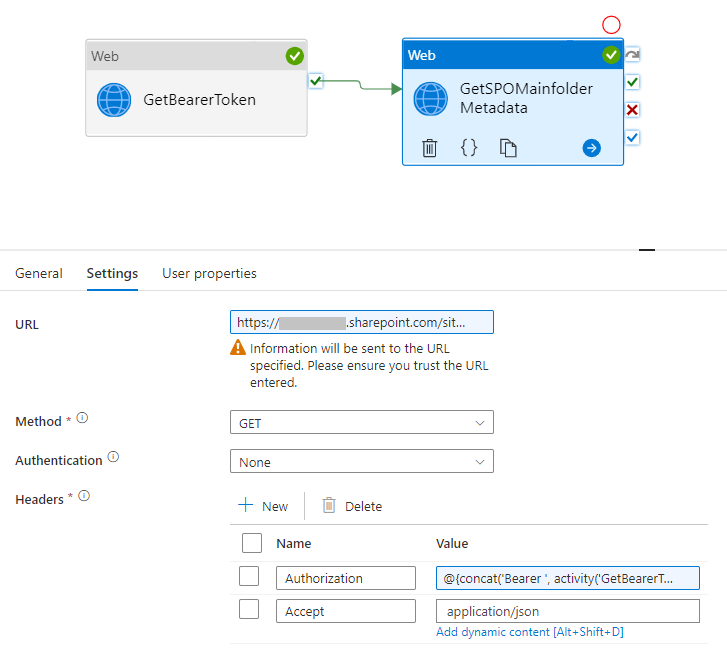

2. Get SPO folder metadata (list of subfolders)

Now that we can retrieve access tokens from SPO we are able to get information from the SPO tenant. In order to copy multiple files from multiple folders we first have to get a list of all subfolders currently located in the mainfolder. To receive this list, we will use an API function from SPO which returns the metadata of a specified folder:

- Add another Web activity and connect it with the previous one

- Under General choose a suitable name (in my case: GetSPOMainfolderMetadata) and check the box for secure input (again to ensure the access token does not get logged in your pipeline runs)

- Under Settings put in the following information:

- URL:

https://[sharepoint-domain-name].sharepoint.com/sites/[sharepoint-site]/_api/web/GetFolderByServerRelativeUrl('/sites/[sharepoint-site]/[relative-path-to-folder]')/Folders- replace

[sharepoint-domain-name]with your SPO domain name (in my case: syntera) - replace

[sharepoint-site]with your SPO site name (in my case: blog) - replace

[relative-path-to-file]with the relative URL of your folder (you can check the folder path in SPO by right-clicking on the folder, selecting Details and selecting More details in the bottom of the window on the right)

- replace

- Method: GET

- Authentication: None

- Headers (there are two headers):

- Name1: Authorization

- Value1:

@{concat('Bearer ', activity('GetBearerToken').output.access_token)}- Replace GetBearerToken with the name you gave to the first web activity

- Name2: Accept

- Value2:

application/json

- URL:

- Select Debug to check if everything is set up correctly (check the output of the activity to see the folder metadata)

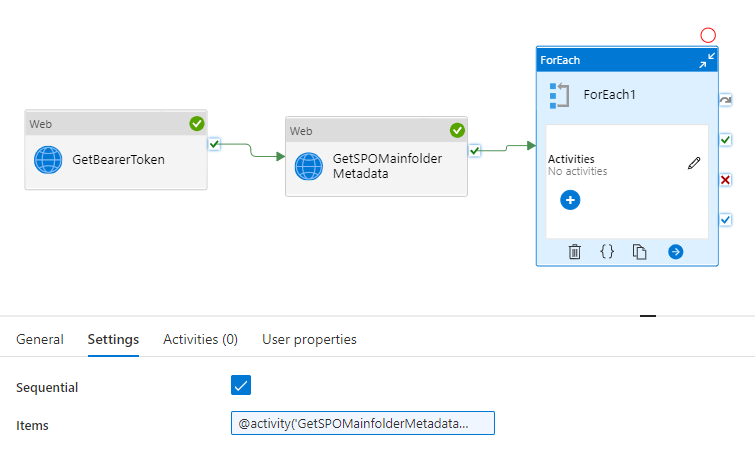

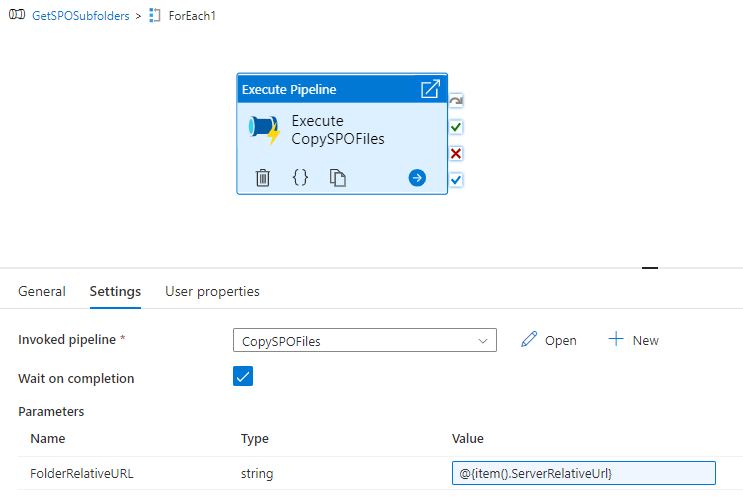

3. Iterate through list of SPO subfolders and for each trigger childpipeline

With the list of subfolders, we received as an output of the “GetSPOMainfolderMetadata” web activity, we can now setup the execution of the childpipeline. For this task we need a ForEach activity to loop through every subfolder in the list and trigger the childpipeline run. To hand over each subfolder URL to the childpipeline we need to introduce a pipeline parameter to provide the corresponding value and use it in the childpipeline run.

- Add a ForEach activity and connect it with the previous web activity

- Under Settings check the box Sequential and in Items enter the following:

@activity('GetSPOMainfolderMetadata').output.value- Replace GetSPOFolderMetadata with the name you chose for the second web activity

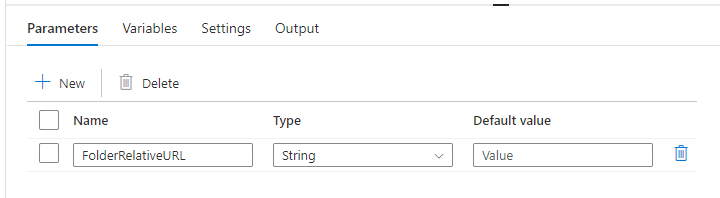

- Create a new pipeline, rename it (in my case: CopySPOFiles) and under Parameters create a new parameter named “FolderRelativeURL”. This parameter will be used to hand over the relative URL path of the subfolder to the childpipeline.

- Inside the ForEach activity add an Execute pipeline activity and rename it (in my case: Execute CopySPOFiles)

- In Settings under put in the following information:

- In Invoked pipeline select your childpipeline

- Under Parameters note that the parameter “FolderRelativeURL” appears, which we created in the childpipeline and set the value to:

@{item().ServerRelativeUrl}- This references the ServerRelativeUrl field in the .json received from the GetSPOMainfolderMetadata

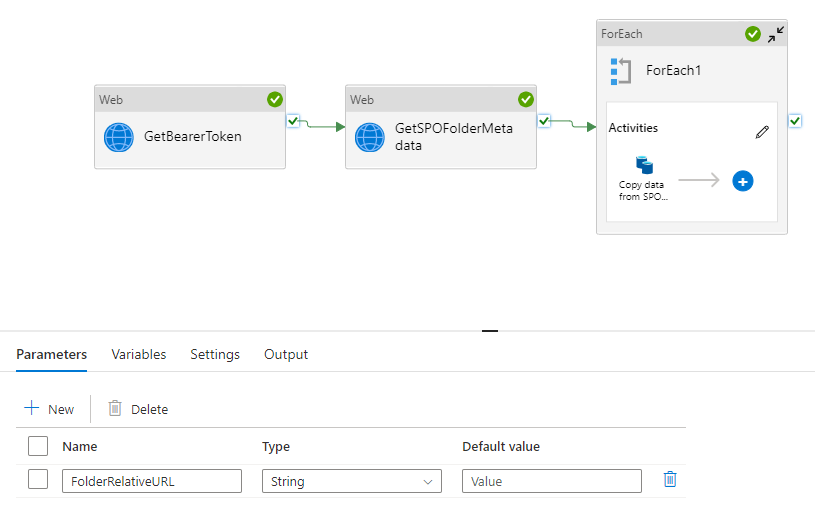

Setup for childpipeline “CopySPOFiles”

We now have a working pipeline, which can extract all subfolders of a mainfolder and for each subfolder executes a childpipeline. In my case, the childpipeline is set up to loop through all files in the subfolder and copy them to a Blob Storage. If you want to know how to do that you can follow along my previous blog (Copy files from SharePoint to Blob Storage using Azure Data Factory – Syntera). The following pipeline is the result of the said blog and if you follow along you should end up with the same picture. The only difference is the parameter FolderRelativeURL, which we created in the last step.

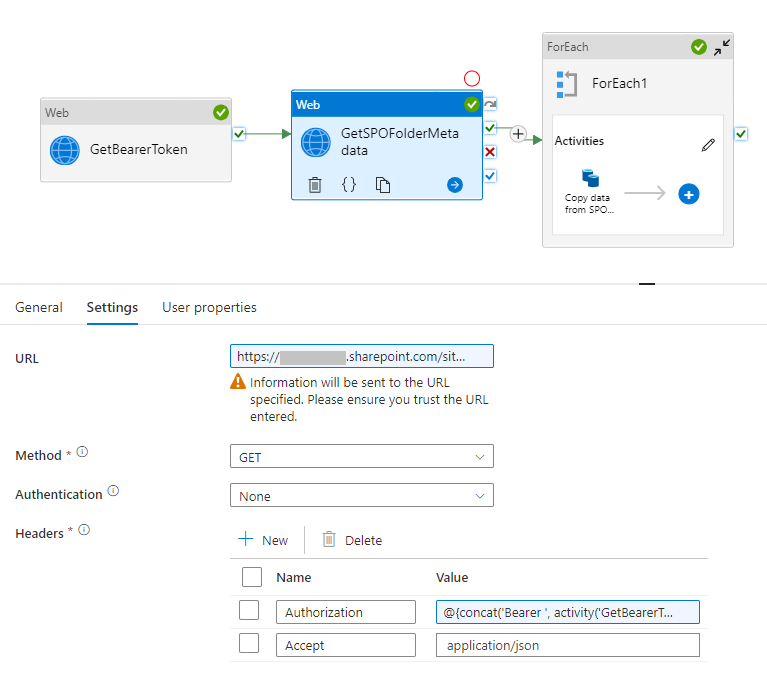

Enabling the childpipeline to use the dynamically extracted subfolders from the mainpipeline requires one last step. We need to pass the FolderRelativeURL parameter received from the mainpipeline to the “GetSPOFolderMetadata”. For this purpose the URL called within the “GetSPOFolderMetadata” activity needs to be changed to the following:

- URL:

https://[sharepoint-domain-name].sharepoint.com/sites/[sharepoint-site]/_api/web/GetFolderByServerRelativeUrl('@{pipeline().parameters.FolderRelativeURL')/Files- replace

[sharepoint-domain-name]with your SPO domain name (in my case: syntera) - replace

[sharepoint-site]with your SPO site name (in my case: blog) @{pipeline().parameters.FolderRelativeURL

- replace

Unfortunately, there is no way around requesting a new BearerToken in the childpipeline, since handing over the token from the mainpipeline would result in logging the token in the pipeline runs. There is currently no possibility to secure parameters exchanged between pipelines in the same way as it is possible to secure outputs between activities.

12 responses to “Nested ForEach loops in Azure Data Factory”

I am trying this method to copy 500 gb of data from SPO to Azure files. And metain metadata and folder structure. but i followed every step in your guid, but i am not able to get this method to work. Can you help me? i am getting itemscount 0 at the last activity

Hi Roy

For a better understanding: Do you get the itemscount 0 in the last activity of the childpipeline (where the files are copied) or in the mainpipeline (where we iterates through the folders)?

For Each activities iterate through a list of items. Itemscount 0 implies that either your list is empty (prior get metadata activity did not return any objects) or the reference to your list of items in the for each activity is not set up correctly.

Can you please check the output of the prior get metadata activity and confirm that you have at least one item (1-n entries under “value” in the json output)

Please, I am using Excel and not binary, so I cannot see the dataset properties. Do you have any suggestions!

Hi Rita,

The reason you can’t see the dataset properties has nothing to do with your datasource type but rather with the fact that you did not setup any parameters in your dataset that would show up as dataset properties in the copy data activity.

Please have a look at 6.5 in the previous blog that this blog is based on to see how to setup the necessary dataset parameters (https://www.syntera.ch/blog/2022/10/10/copy-files-from-sharepoint-to-blob-storage-using-azure-data-factory/)

Been getting the below error;

ErrorCode=HttpRequestFailedWithClientError,’Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=Http request failed with client error, status code 403 Forbidden, please check your activity settings. If you configured a baseUrl that includes path, please make sure it ends with ‘/’.

I’ve checked the baseURL and it ends with ‘/’. The baseURL ends with: /sites/RoyTestSPO/_api/web/GetFileByServerRelativeUrl/(‘@{linkedService().RelativeURL}’/)/$value. Please help me with this issue

Hi Rec

Microsofts error messages are sometimes not very helpful / missleading. I received the same error message when I built the solution, after a lot of debugging it turned out that it was a permission problem. Most likely there is problem with the URL but the registered app in Azure AD probably has no permission to the sharepoint site.

Can you check if you set up everything accordingly to “2. Grant SPO site permission to registered application in AAD” in the Blog?

Hi Dimitri,

i’ve checked the permissions and it is all setup as you described. But it still doesn’t work.

When looking at your baseURL: /sites/RoyTestSPO/_api/web/GetFileByServerRelativeUrl/(‘@{linkedService().RelativeURL}’/)/$value I have noticed an additional “/” after the reference to the linked service (before “/$value”). Can you try it again without the additional “/”?

Can you add if we want to copy the latest modified files from sharepoint to blob?

Hi Rakesh

Unfortunately, this requirement is not easy to implement. The pipeline is intended for the one-time transfer of documents or as a scheduled trigger, which can be run daily, for example.

As far as I can tell, I see two possible approaches. You could filter the metadata list of the folder using the maximum last update date you can find in the list. But I assume your requirement is to trigger the transfer to the blob storage when a file is uploaded or modified. In that case I would use Power Automate or Azure Logic App to trigger your Data Factory Pipeline, since this tools have a built in trigger for modified or changed files on Sharepoint that you can use.

Hello Dimitri,

Thank you for this blog and it is of great help. I am new to Data Factory, learning things and figuring out a way to copy csv file from share point.

The folder structure I have is something like

*Share point Site*

– Main Folder (Relative URL)

– F1 (contains multiple folders (F1, F2,..,F10))

– F1 Sub Folder 2

– F1 Sub Folder 3

– F1 Sub Folder 4

– .csv File to copy to ADLS Storage

I am able to get the Meta data from the Main folder consisting of Sub Folder 1 and build a child pipeline to show the metadata on the sub folder 2 but I am unable to figure out a iteration through the other sub folders until the end of sub folder 4 to copy the .csv files.

Does this require creating multiple child pipelines with folder metadata and then executing the extraction of csv file?

Is there any other way to reach to the final folder and copy the files? Also, Is there a option to copy all the folders, sub folders and files right from the Main Folder?

Hi Hugh

As stated in the blog sadly there is no easy solution to accomplish nested for loops in Azure Data Factory. Your setup requires multiple nested loops to work, so yes you would need to create a new child pipeline for each hirarchy level of your folder structure.

Maybe there is a way to get the metadata of all files regardles of the hirarchie in your folder structure (check out the proposed solution in this forum: https://sharepoint.stackexchange.com/questions/138135/get-all-files-and-folders-in-one-call)